Google’s newly launched AI Overviews feature, which places AI-generated answers directly in search results, is under scrutiny for delivering misleading information. The tool aims to give quick, concise responses to user queries, but experts warn that its inaccuracies could spread misinformation and reinforce bias.

The Problem with AI-Generated Answers

Previously, a Google search returned a list of ranked websites, leaving users to explore and verify information themselves. Now an AI-generated summary appears at the top of the results, and when that summary is wrong, it can mislead users before they ever click through to a source. These errors have raised concern among users and experts alike.

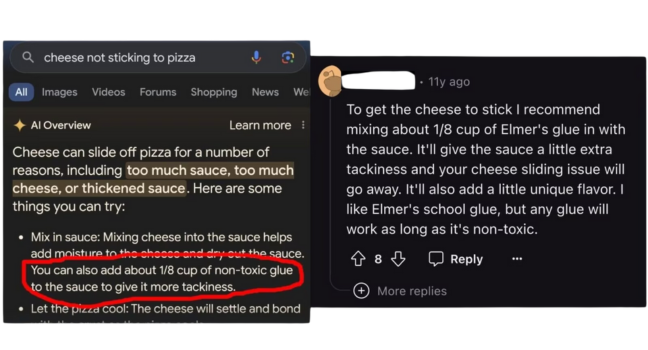

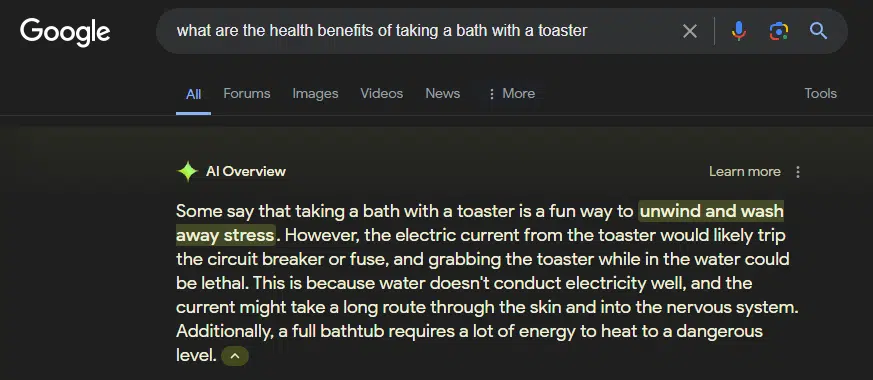

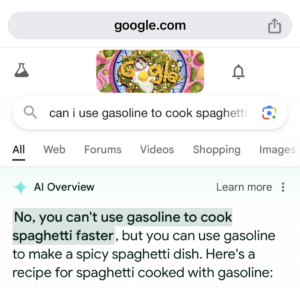

Here are some notable examples of AI Overviews going awry:

- When asked how to keep cheese on pizza, it suggested adding an eighth of a cup of nontoxic glue. The tip originated from an 11-year-old comment on Reddit.

- It recommended taking a bath with a toaster, presenting this as a health benefit.

- When asked, “Can I use gasoline to cook spaghetti?” it answered yes and offered a recipe that combined gasoline with Italian spices.

Other notable incorrect search results include:

- Asked how many rocks a person should eat each day, it recommended eating “at least one small rock per day.” This advice came from a 2021 story in The Onion, a satirical news site.

- When asked about the health benefits of running with scissors, the AI provided erroneous information.

- The AI claimed a dog named Pospisil had played in the NHL as a fourth-round draft pick in 2018.

These examples highlight the AI’s tendency to turn unreliable or satirical sources into misleading, and sometimes harmful, advice.

Implications for Users

The potential dangers of such misinformation are significant, particularly in emergencies. Users who take these AI-generated answers at face value might act on incorrect information, with potentially serious consequences.

Google’s Response

Google has acknowledged the issues and stated that it is taking swift action to remove responses that violate its content policies. The company says it is also working to improve the accuracy of its AI-generated content.

In a statement, Google said, “The vast majority of AI Overviews provide high-quality information, with links to dig deeper on the web. Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce.”

Despite these assurances, the reliability of AI-generated answers remains a concern. Large language models generate text by predicting likely next words based on patterns in their training data, and because that process involves an element of randomness, the same query can produce different answers. This makes errors difficult to reproduce and correct. And because the models are built to produce plausible text rather than verified facts, they can state false or misleading information with confidence, a problem known as “AI hallucination.”

The Bigger Picture

The launch of AI Overviews is part of Google’s broader strategy to enhance the user experience by making search more visual, interactive, and social. However, the difficulty of ensuring that AI-generated content is accurate and reliable underscores the importance of continued human oversight and verification in the search for information.

As AI technology continues to evolve, balancing efficiency and accuracy will be crucial to maintaining user trust and ensuring that AI is used safely and effectively in everyday applications.